Stephen Hawking, Bill Gates and now Demis Hassabis of Google’s DeepMind have all warned of the dangers of artificial intelligence (AI), urging that we put ethical controls in place before it’s too late.

But they have all mistaken the threat: the AI we have let loose is already evolving for its own benefit.

It’s easy to imagine that humans will build ever more clever computers, machines that will end up smarter than we are. Once they pass this point and achieve “superintelligence”, whether trapped inside boxes or installed in roving robots, they could either help us or turn against us. To avoid this nightmare, the Oxford philosopher Nick Bostrom says we must instil the superintelligence with goals that are compatible with our own survival and wellbeing. Hassabis, too, talks about getting “a better understanding of how these goal systems should be built, what values should the machines have”. They may not be dealing with threatening robots, but they are still talking about actual “machines” having values.

But this is not where the threat lies. The fact is that all intelligence emerges in highly interconnected information processing systems; and through the internet we are providing just such a system in which a new kind of intelligence can evolve.

This way of looking at AI rests on the principle of universal Darwinism – the idea that whenever information (a replicator) is copied, with variation and selection, a new evolutionary process begins. The first successful replicator on earth was genes. Their evolution produced all living things, including animals, whose intelligence emerged from brains consisting of interconnected neurons. The second replicator was memes, let loose when humans began to imitate each other. Imitation may seem a trivial ability, but it is very far from that. An animal that can imitate another brings a new level of evolution into being because habits, skills, stories and technologies (memes) are copied, varied and selected. Our brains had to quickly expand to handle the rapidly evolving memes, leading to a new kind of emergent intelligence.

The third replicator is, I suggest, already here, but we are not seeing its true nature. We have built machines that can copy, combine, vary and select enormous quantities of information with high fidelity, far beyond the capacity of the human brain. With all three essential processes (copying, variation and selection) in place, this information must now evolve.

Google is a prime example. Google consults countless sources to select material copied from servers all over the world almost instantly. We may think we are still in control because humans designed the software and we put in the search terms, but other software can use Google too, copying the selected information before passing it on to yet others. Some programs can take parts of other programs and mix them up in new ways. Just as in biological evolution, most new variants will fizzle out, but if any arise that are successful at getting themselves copied, by whatever means, they will spread through the wonderfully interconnected systems of machines that we have made.

Replicators are selfish by nature. They get copied whenever and however they can, regardless of the consequences for us, for other species or for our planet. You cannot give human values to a massive system of evolving information based on machinery that is being expanded and improved every day. Replicators do not care because they cannot care.

I refer to this third replicator as techno-memes, or temes, and I believe they are already evolving way beyond our control. Human intelligence emerged from biological brains with billions of interconnected neurons. AI is emerging in the gazillions of interconnections we have provided through our computers, servers, phones, tablets and every other piece of machinery that copies, varies and selects an ever-increasing amount of information. The scale of this new evolution is almost incomparably greater.

Hassabis urges us to debate the ethics of AI “now, decades before there’s anything that’s actually of any potential consequence or power that we need to worry about, so we have the answers in place well ahead of time”. I say we need to worry right now and worry about the right things. AI is already evolving for its own benefit – not ours. That’s just Darwinism in action.

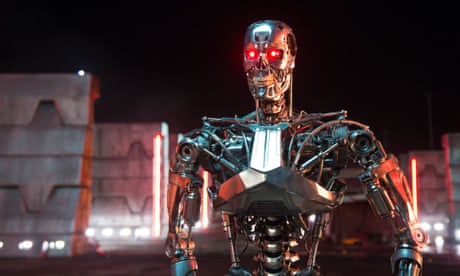

AI: will the machines ever rise up?

Could such a system become artificially intelligent? Given that the natural intelligence we know about emerged from highly interconnected evolving systems, it seems likely if not inevitable. This system is now busy acquiring the equivalent of eyes and ears in the millions of CCTV cameras, listening devices and drones that we are happily supplying. We do all this so willingly, apparently oblivious to the evolutionary implications.

So what might we expect of our future role in this vast machine? We might be like the humble mitochondrion, which supplies energy to all our body’s cells. Mitochondria were once free-living bacteria that became absorbed into larger cells in the process known as endosymbiosis, a deal that benefited both sides.

As we continue to supply the great teme machine with all it needs to grow, could we end up like this, willingly going on feeding it because we cannot give up all the digital goodies we have become used to?

It’s not a superintelligent machine that we should worry about but the hardware, software and data we willingly add to every day.